Most of the write-ups on Apple’s newest addition to the ecosystem are fairly critical, or at least skeptical. There are countless reviews and endless opinions on the now two-year-old wireless earbuds. Most focus on sub-par sound quality, the price point, or Apple’s coercion tactics in creating the ultimate Apple fanboy. My biggest complaint: I don’t know how much charge the case has unless the AirPods are docked. The first-edition earbuds simply don’t communicate that.

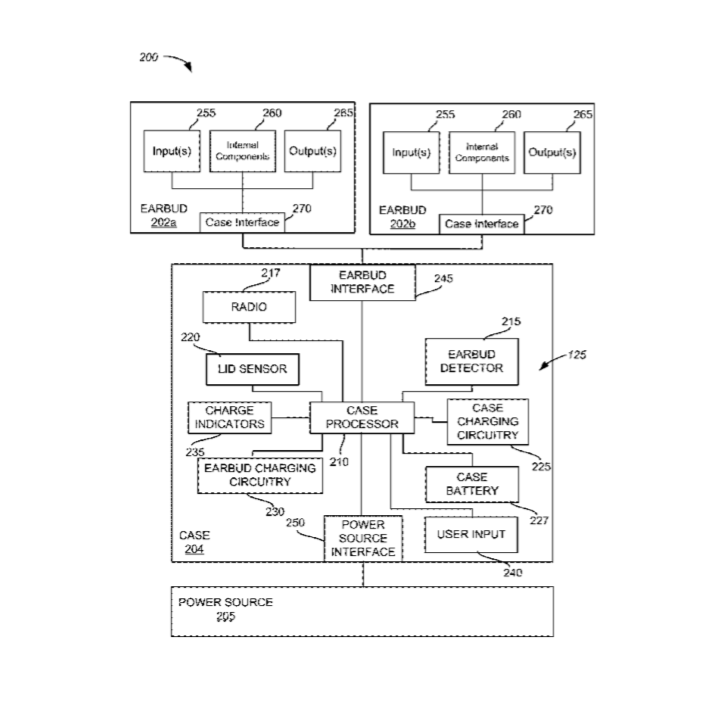

Apple is making moves, though. The company recently patented a new version of the earbuds to be released later in 2019. It’s mostly speculation, but the patents suggest that Apple is adding health and fitness capabilities to the buds. It’s a dated trend to jump on, and these are features usually seen in tech on your wrist, not in your ears.

One thing is obvious from the new patents: the AirPods retain their essential features. They probably won’t differ vastly from their predecessor. Certainly, they’ll carry improvements, like erasing chirality. No more matching that L or R to your L or R. Let me make my case, though: AirPods have defined the way we want to interface with smart technology. That comes from feats of physical computing, creative UI, and trading novelty for practicality.

Physical Computing & the Growing Internet of Things

I won’t bore you with all the fancy technical details, but these might be terms you’re familiar with. They’re the bigger concepts behind the growing catalog of smart home technology and the world of sensors. The Internet of Things, or IoT, refers to cars and everyday technology with sensors and systems built in. These devices are feats of physical computing: they are able to take in and interpret data from the real world. The goal of all of these technologies is to connect everything seamlessly in a network. Then your fridge can tell you when you’re out of a particular food, through a notification on your phone. Or your lights can know when you walk through the door.
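For the curious, the fridge example can be sketched in a few lines of Python. This is only an illustration of the idea (sensor readings in, a phone notification out), not how any real appliance works. The function name `restock_alerts`, the `minimum` threshold, and the sample readings are all made up for this sketch.

```python
def restock_alerts(shelf_counts, minimum=1):
    """Return one notification per item whose sensed count is below `minimum`.

    `shelf_counts` is a hypothetical mapping of item name to the count
    reported by the fridge's sensors.
    """
    return [
        f"You're out of {item}"
        for item, count in sorted(shelf_counts.items())
        if count < minimum
    ]


# Hypothetical sensor readings; in a real system these would arrive over the network.
readings = {"milk": 0, "eggs": 6, "butter": 0}
alerts = restock_alerts(readings)

for message in alerts:
    print(message)  # stand-in for a push notification to your phone
```

The point is how little the person has to do: the data is collected and interpreted without anyone opening the fridge door.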

The customizable gesture features in the AirPods are a miracle we can thank shrinking technology for. Wi-Fi networks have been ubiquitous for the last decade, and a huge reason why nothing of this nature came about in that time is that sensors were just too bulky to load into something as tiny as an earbud.

UI to Define the Future of UI

Apple’s AirPods define a user experience and interface that exemplify an ideal version of Orwellian dreams. Where your dad (like mine) might be afraid of his Google Home listening in on his conversations, there’s nothing to fear in the closed loop of interaction between your phone and Apple’s young wireless earbuds.

Until recently, technology was only as helpful as how quickly you could grab your phone or open your laptop. AirPods offer more than hands-free capabilities: they give a user a seamless interface that blends in with their daily activities. This might seem obvious, but behind the scenes, performing a gesture on your AirPods is a sensor talking to a system, which in turn talks to your phone. Apple might be having a bit of fun with all the sensors it can fit in the device. A magnetic sensor knows when you flip the lid, so your phone screen can display some animations and charge stats. The buds know when they’re put in or taken out of your ears, to pause music or redirect sound. This is the essence of perfect UI: handing you the information you would otherwise look for, and performing the action you would otherwise perform yourself.
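That chain of sensor, system, and phone can be sketched as a tiny event pipeline. To be clear, this is a toy model written under my own assumptions, not Apple’s implementation: the event names, the `Phone` class, the `relay` function, and the event-to-command table are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class SensorEvent(Enum):
    """Hypothetical events an earbud's sensors might report."""
    PLACED_IN_EAR = auto()
    REMOVED_FROM_EAR = auto()
    DOUBLE_TAP = auto()


@dataclass
class Phone:
    """Stand-in for the phone at the end of the chain."""
    playing: bool = False
    log: list = field(default_factory=list)

    def handle(self, command: str) -> None:
        # The phone only sees high-level commands, never raw sensor data.
        if command == "play":
            self.playing = True
        elif command == "pause":
            self.playing = False
        elif command == "toggle":
            self.playing = not self.playing
        self.log.append(command)


# The "system" in the middle: a lookup from sensor event to phone command.
# The double-tap entry is where a customizable gesture would plug in.
EVENT_TO_COMMAND = {
    SensorEvent.PLACED_IN_EAR: "play",
    SensorEvent.REMOVED_FROM_EAR: "pause",
    SensorEvent.DOUBLE_TAP: "toggle",
}


def relay(event: SensorEvent, phone: Phone) -> None:
    """Translate one sensor event into a command and hand it to the phone."""
    phone.handle(EVENT_TO_COMMAND[event])


phone = Phone()
for event in (
    SensorEvent.PLACED_IN_EAR,
    SensorEvent.DOUBLE_TAP,
    SensorEvent.REMOVED_FROM_EAR,
):
    relay(event, phone)
```

Notice that nothing in the loop asks the user for anything. Putting a bud in, tapping it, and taking it out are things you would do anyway, and the mapping in the middle turns them into commands.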

This is something that I can’t find in Amazon’s Alexa devices or the Google Home. Nor in any other wireless headset. Or even in smart lighting. They are great at being assistants and great at being “smart”, but terrible at blending into daily life. They require an elaborate setup process. They often need troubleshooting. Did you want to add a new light or set of lights to your home? If you’re like me, you’re going to put it off for weeks because of all the rearranging, countless menus, extra equipment, and Wi-Fi proximity issues involved.

Practical Dreams

Nothing exemplifies a perfect UI today, especially in our smart devices. They are always changing to fit our needs, but the logistics behind the most practical features still remain a consumer’s dream. Perhaps in the future, a sensor could detect fluid near a port and close a hatch over it. It sounds like something out of the 1950s idea of “the future,” but really, wouldn’t people from the 1950s think we’re weird and tacky for walking around with two white beans stuffed in our ears? The most useful concepts are the ones you can’t even fathom. We haven’t yet experienced technology as something that simply brings us what we need, because we still have to manually interact with the system by some physical means before it gives us feedback.

What will live on from AirPods is not the cute adornments, not the novel and tacky animations on your phone, and certainly not the health features (which are still not very accurate). The lesson of AirPods is that the best thing about them is that we can use them without knowing they are there. They perform as an extension of the human body.